A Guide to Growth Experimentation: Turning Experimentation into a Competitive Advantage

TL;DR

Most teams guess their way into product decisions — experimentation replaces guessing with data. This guide breaks down how to run a disciplined experimentation program that uncovers growth opportunities across acquisition, conversion, retention, and operations. You’ll learn how to identify friction, form strong hypotheses, prioritize with impact vs. effort, design experiments correctly, and build a repeatable learning system that compounds results over time.

In a market where customer expectations shift faster than product roadmaps, the companies that win aren’t just the ones that build the best features. They’re the ones that learn the fastest. Growth experimentation is the engine behind that learning. It’s how startup and established enterprise product teams uncover what truly moves the needle across acquisition, conversion, retention, and monetization.

Whether you’re building net-new funnels, optimizing an underperforming step of the journey, or trying to unlock operational leverage at scale, a disciplined experimentation program helps you ship smarter, avoid costly guesses, and generate measurable impact.

This guide breaks down how experimentation works, why it matters, and how high-growth companies use it to fuel compounding results.

What Is Growth Experimentation?

Growth experimentation is the structured process of testing ideas. These ideas come in the form of new products, new product features or marketing changes. The goal is to understand cause and effect. Instead of relying on opinions, teams run controlled experiments (like A/B tests) to measure whether a change actually improves a core metric.

At its core, experimentation is about:

Hypothesis-driven decision-making

Rapid iteration based on data

Reducing the cost of failure

Finding repeatable, scalable growth levers

If testing hypotheses sounds familiar, it should. Essentially, growth experimentation is the scientific method applied to tech products to help them grow.

Why Growth Experimentation Matters

Most teams have ideas about what could improve performance, but ideas aren’t impact. Experimentation confirms which ideas are winners and which are noise, and it gives structure to finding successful levers for growth.

High-growth organizations operate on insights, not opinions. A shared experimentation framework encourages curiosity, data literacy, and transparency.

Optimizing existing funnels (e.g., onboarding, pricing, verification flows, checkout) often yields higher ROI than acquiring more customers.

Some of the most effective changes (button copy, mobile layout shifts, error messaging, choice architecture) are not intuitive. Experiments uncover these hidden levers.

Even losing tests are valuable. They help teams eliminate dead ends, refine hypotheses, and understand user behavior.

In the Lowe & Co approach, we go a step further: rather than simply accepting a winner, we aim to understand why it won. Were demographic preferences or psychological biases at play? Answering questions like these gives us reliable insights with which to design excellent products and features.

Companies that run continuous experiments see:

10–40% conversion rate lifts in key funnels

Higher LTV through better activation and retention

Lower CAC by improving organic & paid efficiency

Operational savings through simplified flows

Stronger cross-functional alignment

The Growth Experimentation Framework

Data Analysis

I start by identifying growth opportunities through deep quantitative and qualitative analysis. Quantitatively, I do a funnel teardown, looking for sharp conversion drops or for steps that convert below the levels I’ve typically seen. Breaking traffic down into segments and cohorts often yields deeper learning. Finally, I look at how operational process data correlates with the funnel’s ability to convert.

Qualitatively, on the other hand, I like to dive into user research, session replays (with tools like FullStory), customer survey feedback, and any internal or customer support tickets. Being able to trend-analyze internal and customer support tickets will reveal many opportunities for growth even with a limited understanding of the product.

The goal of quantitative and qualitative analysis is to understand where actual consumer behavior deviates from expectations. These are our opportunities for growth.
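The quantitative side of this teardown can be sketched in a few lines of Python. This is a minimal illustration rather than a prescribed tool: the step names and user counts are hypothetical, and in practice the same calculation would be repeated for each segment or cohort.

```python
def funnel_teardown(step_counts):
    """Return (step, users, step_conversion, overall_conversion) per funnel step.

    step_counts is an ordered list of (step_name, users_reaching_step).
    """
    rows = []
    first_users = step_counts[0][1]
    prev_users = first_users
    for name, users in step_counts:
        # Conversion from the previous step, and from the top of the funnel.
        step_conv = users / prev_users if prev_users else 0.0
        overall_conv = users / first_users if first_users else 0.0
        rows.append((name, users, step_conv, overall_conv))
        prev_users = users
    return rows


def biggest_drop(rows):
    """Name of the step with the lowest step-to-step conversion (first step excluded)."""
    return min(rows[1:], key=lambda row: row[2])[0]


# Hypothetical counts, for illustration only.
funnel = [
    ("landing", 10000),
    ("signup", 4000),
    ("verification", 1800),
    ("checkout", 1500),
]

rows = funnel_teardown(funnel)
for name, users, step_conv, overall_conv in rows:
    print(f"{name:<14}{users:>8}{step_conv:>9.1%}{overall_conv:>9.1%}")
print("Largest step-to-step drop:", biggest_drop(rows))
```

The step with the lowest step-to-step conversion is only a starting point. Whether it is actually subpar depends on the benchmark you expect for that kind of step.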

Formulate Hypothesis

Then, the challenge is to create a hypothesis. A hypothesis, at its core, is a testable prediction about the relationship between two variables: “If I make X change, then I might see Y result.” A stronger hypothesis connects an observation to the expected impact of changing a variable: “Conversion is X% at this stage and there are 5 choices a consumer can make to get through this stage. If we reduce the number of choices, we might expect a 5-10% increase in conversion at this stage.”
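To turn a hypothesis like this into an impact estimate, it helps to translate the expected lift into incremental conversions. The sketch below assumes the 5-10% is a relative lift on the stage’s baseline conversion rate. The traffic and baseline figures are hypothetical placeholders, not numbers from a real funnel.

```python
def expected_incremental_conversions(monthly_entrants, baseline_rate, relative_lift):
    """Extra conversions per month if the stage's rate improves by relative_lift."""
    return monthly_entrants * baseline_rate * relative_lift


# Hypothetical inputs: 20,000 users reach the stage each month and 30% convert.
monthly_entrants = 20000
baseline_rate = 0.30

low = expected_incremental_conversions(monthly_entrants, baseline_rate, 0.05)
high = expected_incremental_conversions(monthly_entrants, baseline_rate, 0.10)
print(f"Expected extra conversions per month: {low:.0f} to {high:.0f}")
```

A range like this is what feeds the impact side of prioritization.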

Prioritization

You will of course uncover many growth opportunities and create hypotheses with corresponding impact estimates. How will you decide which to do first? You will need a prioritization framework to organize the realm of possible experiments. This is the bread and butter of a Growth PM, because this exercise is the chance to optimize business value and product economics.

How? What we are organizing is an Experimentation Roadmap, which is nothing more than an investment thesis. The time value of money says that a dollar today is worth more than a dollar tomorrow, because today’s dollar can be invested, earn interest, and grow into a sum larger than one dollar. Therefore, when deciding which initiatives (investments) to do first, we must take into account the cost of doing the thing (effort) and the potential upside from the thing (impact). The time value of money dictates that we should prioritize things with lower cost but bigger upside first.

This prioritization can be done in various ways, ranging from simple to complex. Every initiative must have a rationale for its priority, but we cannot realistically give every growth experiment a highly complex prioritization exercise. Doing so slows the rapid, iterative velocity of growth experimentation, which in turn slows the speed of learning and value creation.

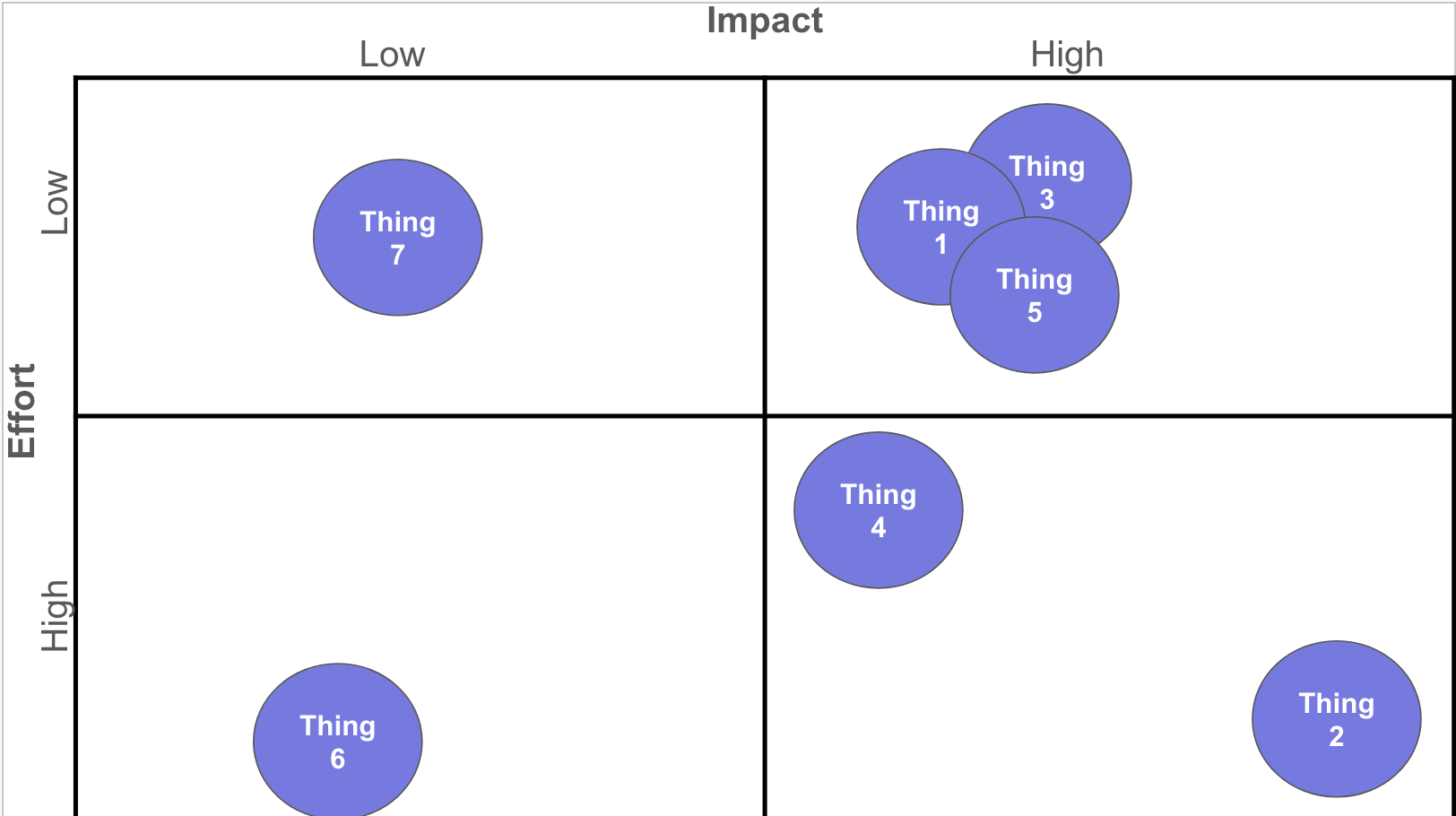

I start with a simple Impact vs Effort Matrix, which helps to visualize how growth experiments compare with one another. Being able to place initiatives on the matrix with confidence comes with time and experience. It varies from organization to organization and from product to product. Reaching out to cross-functional teams is paramount, and creating this visual helps in having those conversations, whether to place initiatives initially or to move them around on the matrix.

Impact versus Effort Matrix
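As a rough illustration, placing initiatives on the matrix amounts to bucketing each one by two scores. In the sketch below, the 1-10 scales, the midpoint threshold, the quadrant labels, and the initiative names are all assumptions chosen for the example, not part of any fixed standard.

```python
def quadrant(impact, effort, threshold=5):
    """Place an initiative in a quadrant of an impact vs effort matrix.

    impact and effort are scored on an assumed 1-10 scale; scores at or
    above the threshold count as "high".
    """
    high_impact = impact >= threshold
    high_effort = effort >= threshold
    if high_impact and not high_effort:
        return "quick win"   # high impact, low effort: do first
    if high_impact and high_effort:
        return "big bet"     # high impact, high effort: plan carefully
    if not high_impact and not high_effort:
        return "fill-in"     # low impact, low effort: do when convenient
    return "time sink"       # low impact, high effort: usually skip


# Hypothetical initiatives as (name, impact, effort).
initiatives = [
    ("Reduce plan choices at signup", 8, 3),
    ("Rebuild verification flow", 9, 8),
    ("Rewrite error messages", 4, 2),
    ("Redesign settings page", 3, 7),
]

for name, impact, effort in initiatives:
    print(f"{name:<32}{quadrant(impact, effort)}")
```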

What can often happen with this simpler method is that a few initiatives cluster together. That can give the impression that it makes no difference which of the clustered initiatives to do first. However, if you dive deeper with a more complex prioritization method, a leader will emerge. There are a number of more intricate prioritization methods; I like to use RICE (Reach, Impact, Confidence, Effort).
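RICE is commonly computed as Reach × Impact × Confidence ÷ Effort. The sketch below shows how that score can separate initiatives that look interchangeable on the matrix. The initiative names, the scoring scales, and every number are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Initiative:
    name: str
    reach: float       # users affected per period (assumed: per quarter)
    impact: float      # assumed scale, e.g. 0.25 (minimal) to 3 (massive)
    confidence: float  # 0.0 to 1.0
    effort: float      # assumed unit: person-weeks

    @property
    def rice(self):
        """RICE score: reach * impact * confidence / effort."""
        return self.reach * self.impact * self.confidence / self.effort


# Hypothetical initiatives that cluster together on the impact/effort matrix.
backlog = [
    Initiative("Inline verification errors", reach=5000, impact=2, confidence=0.5, effort=4),
    Initiative("Simplify plan choices", reach=8000, impact=1, confidence=0.8, effort=4),
    Initiative("Shorter signup form", reach=3000, impact=1, confidence=0.9, effort=3),
]

ranked = sorted(backlog, key=lambda item: item.rice, reverse=True)
for item in ranked:
    print(f"{item.name:<30}{item.rice:>10.0f}")
```

The score is only as good as its inputs, so the confidence term is where cross-functional input tends to matter most.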

Experimentation

Once we have a roadmap (investment thesis) that is finalized, communicated, and supported across the organization, the next step is to execute against it. There are a number of things to consider when running experiments: the appropriate experimentation approach (A/B Test, Multivariate, Multi-Armed Bandit, Pre/Post); the primary goal metrics (defined in the impact assessment part of formulating the hypothesis); how long the experiment should run; how large the sample must be to reach statistical significance; and the instrumentation needed to detect any unforeseen impacts on secondary goal metrics. The task here is not to Y.O.L.O. an experiment and hope for the best. We need to be calculated to minimize disruption to the core funnel, to the customer, and to the organization. Remember, not every experiment will be a winner, although the learnings from losing experiments are still a win. We need to monitor the experiment and ensure no detrimental impacts are seen out of the gate.
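Sample size and run time can be estimated before launch. The sketch below uses the standard normal-approximation formula for comparing two conversion rates in a simple A/B test. The 5% significance level, 80% power, baseline rate, target lift, and daily traffic are all assumed values for illustration. Other designs, such as multivariate tests or bandits, need different calculations.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_lift, alpha=0.05, power=0.80):
    """Approximate users needed per variant to detect a relative lift
    in a conversion rate with a two-sided test."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


def days_to_run(n_per_variant, daily_entrants, variants=2):
    """Days needed if daily_entrants users are split evenly across variants."""
    return math.ceil(n_per_variant * variants / daily_entrants)


# Hypothetical inputs: 30% baseline conversion, hoping to detect a 10% relative lift,
# with 1,000 users entering the stage per day.
n = sample_size_per_variant(baseline_rate=0.30, relative_lift=0.10)
print("Users needed per variant:", n)
print("Estimated days to run:", days_to_run(n, daily_entrants=1000))
```

Smaller expected lifts need much larger samples, which is one reason the impact estimate in the hypothesis matters before the test starts.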

Measure, Learn, Iterate

After the test runs long enough to reach statistical significance:

Analyze results by segment

Identify downstream impacts (e.g., customers completing the funnel, funded volume, etc.)

Document findings

Feed learnings back into the roadmap

Turn winners into permanent features

Turn losers into refined hypotheses
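For the first step, analyzing results by segment, a two-proportion z-test is one common way to check whether a difference in conversion is statistically significant. The sketch below is a minimal version using only the standard library. The segment names and counts are hypothetical, and the 0.05 cutoff is an assumed convention.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Compare control (a) and variant (b) conversion rates.

    Returns (relative_lift, z_score, two_sided_p_value).
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return p_b / p_a - 1, z, p_value


# Hypothetical results per segment:
# (control conversions, control users, variant conversions, variant users)
segments = {
    "mobile": (300, 1000, 360, 1000),
    "desktop": (400, 1000, 405, 1000),
}

results = {}
for segment, counts in segments.items():
    lift, z, p_value = two_proportion_z_test(*counts)
    results[segment] = (lift, z, p_value)
    verdict = "significant" if p_value < 0.05 else "not significant"
    print(f"{segment:<10}lift={lift:+.1%}  z={z:.2f}  p={p_value:.4f}  {verdict}")
```

Slicing by many segments raises the chance of a false positive in at least one of them, so segment-level wins are best treated as new hypotheses to test rather than final conclusions.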

A mature program turns this into a continuous learning loop. Teams with strong experimentation cultures ship experiments on a weekly basis. Experimentation results and synthesized learnings are stored in a central repository. The organization is equipped with the experimentation and data tools that enable a successful experimentation culture, and the experimentation mindset expands beyond the product and engineering teams into Compliance, Legal, Marketing, and other cross-functional partners. In this type of organization, growth experimentation becomes a moat, a competitive advantage.

This is exactly the type of framework we implement at Lowe & Co Growth Advisors: helping fintechs, startups, and emerging brands build scalable experimentation systems that uncover hidden growth potential with a focus on product-led growth.